Spark AI: Designing a Smarter Output Experience

About Spark™ AI by ChargeLab

Overview

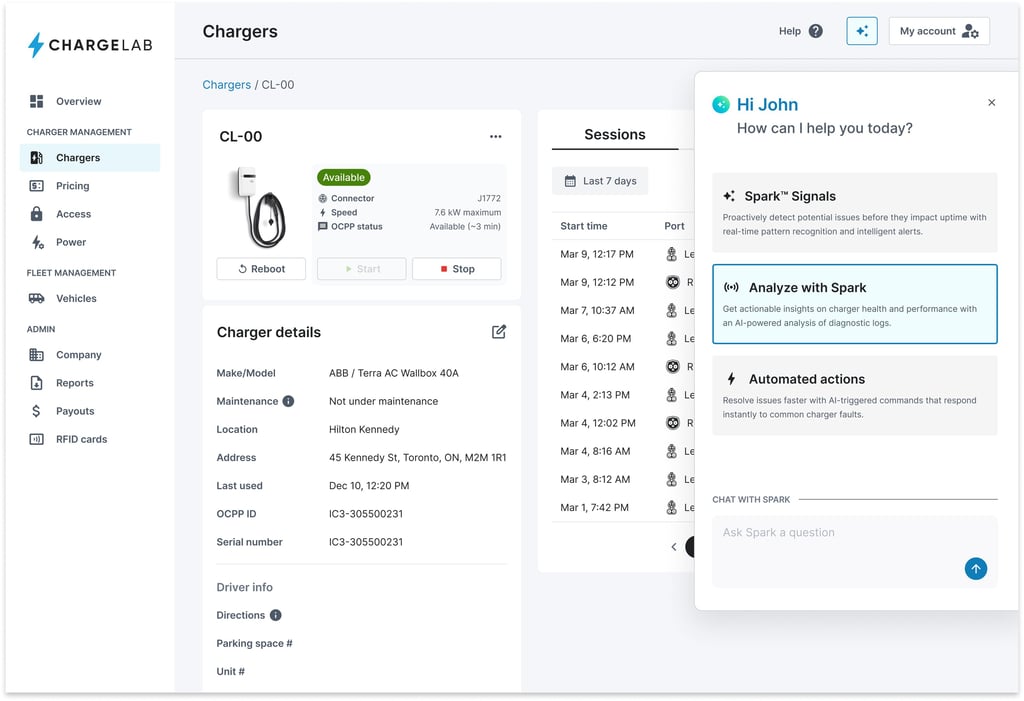

Spark™ AI is ChargeLab’s intelligent AI assistant built to simplify EV charger operations. By surfacing real-time diagnostics and recommending next-best actions, it helps site hosts and operators troubleshoot faster and with more confidence. Spark was designed to turn complex, technical data into clear, actionable insights directly within the UI.

Role

Product Designer & Researcher

Tools

Figma

Timeline

2 weeks of Research and Design, 1 week of Testing

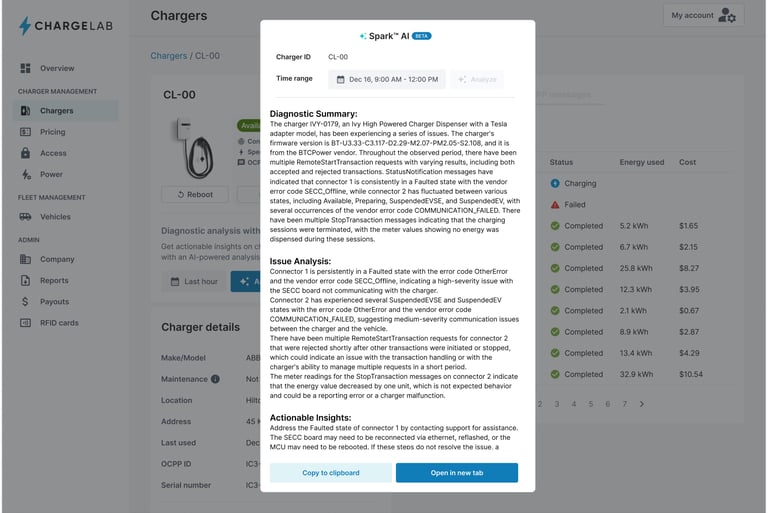

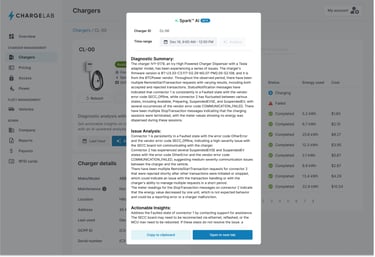

Context

The initial version of Spark™ AI provided valuable charger diagnostics but presented them in a verbose, dense format that limited usability. Technical constraints also meant the UI lacked visual clarity, which reduced engagement. I was brought in to collaborate with the Spark Pod on rethinking the interface, transforming raw output into a more intuitive, visually digestible experience that made key insights easier for users to act on.

Spark Version 1

Problem

While Spark™ AI was a powerful tool under the hood, its value wasn’t translating to users. The diagnostic output was often overwhelming: dense HTML strings and long blocks of text that were hard to parse quickly. Limitations in how the data was rendered made it difficult to prioritize or style information, which resulted in low adoption among the operators and support staff who needed quick, actionable insights.

Design Goals

Make Spark easier to use by simplifying how AI outputs are structured and presented

Highlight key insights at a glance through clearer visual hierarchy and grouping

Improve visual design while working within existing rendering limitations

Ensure trust and usability by making Spark feel like a native part of the product ecosystem

Collaborate cross-functionally with PMs and engineers to prototype within real constraints

Collaborate with the Spark Pod to test and validate designs

Solution

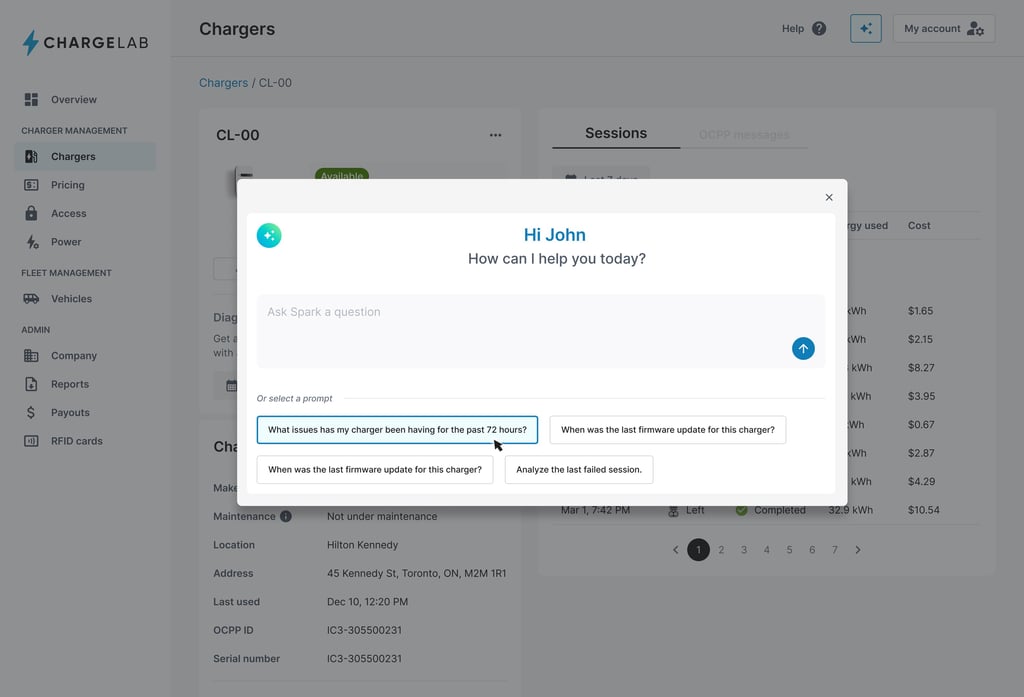

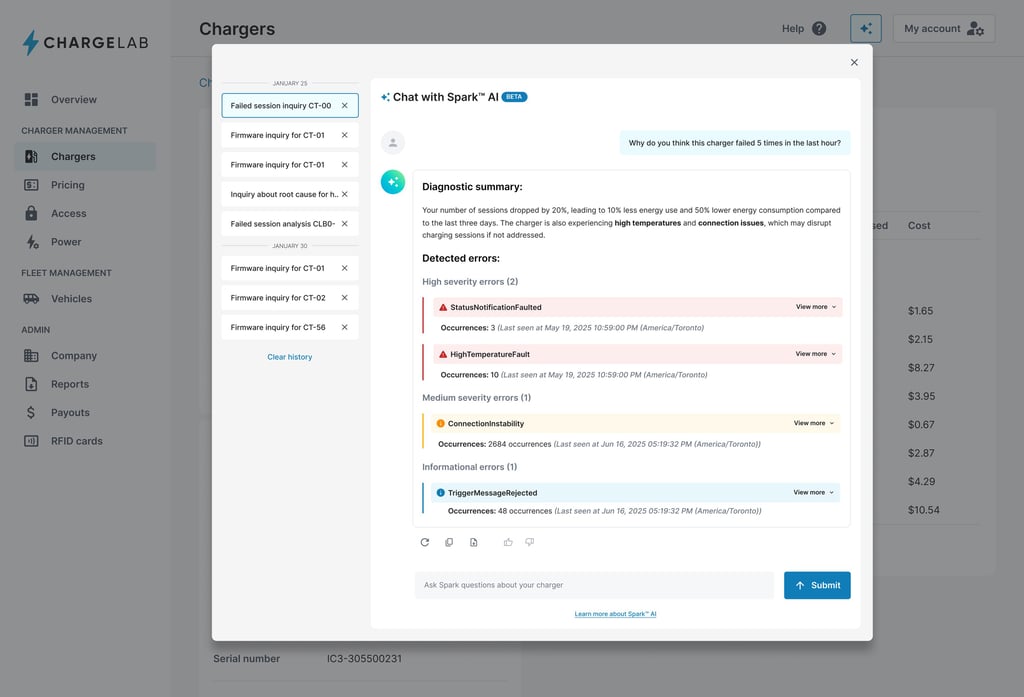

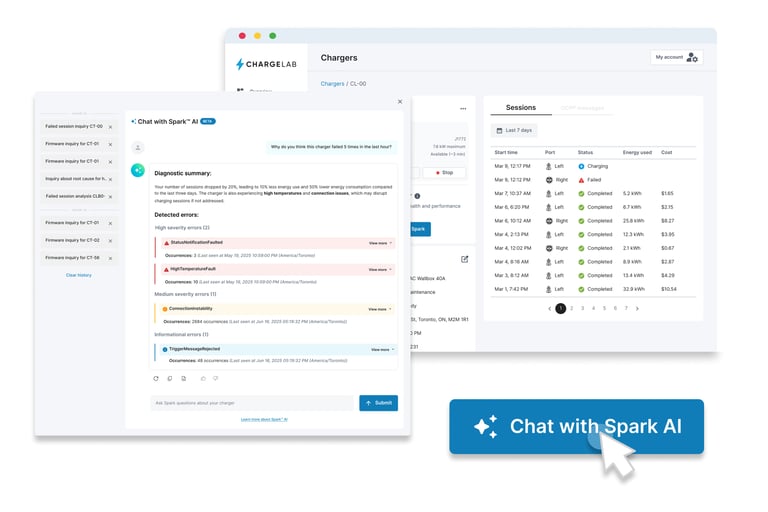

To improve usability, we reimagined Spark™ AI as a chatbot interface, aiming to create a conversational experience where users could easily access insights and guidance. This conversational version is still in development, though well underway. Meanwhile, I designed a more digestible output format for the current UI, including a color-coded alert system, a concise diagnostic summary, and a clear root-cause analysis.

While the engineering team led the development of the large language model (LLM) and backend AI capabilities, my focus was on crafting the user interface and experience to make complex AI output understandable and actionable for operators.